Bankopladerne.dk

So I have this “hobby site” called bankopladerne.dk. The main purpose is to expose free bankoplader for everybody to use, but there are also some (hidden) features only available to paying customers.

The “backend” primarily serves PNG’s and PDF’s based on the users needs and there are also “play and check” facilities both for the free boards and for paying customers where the paying customers gets access to their specific boards using a link with an encoded expiring access key.

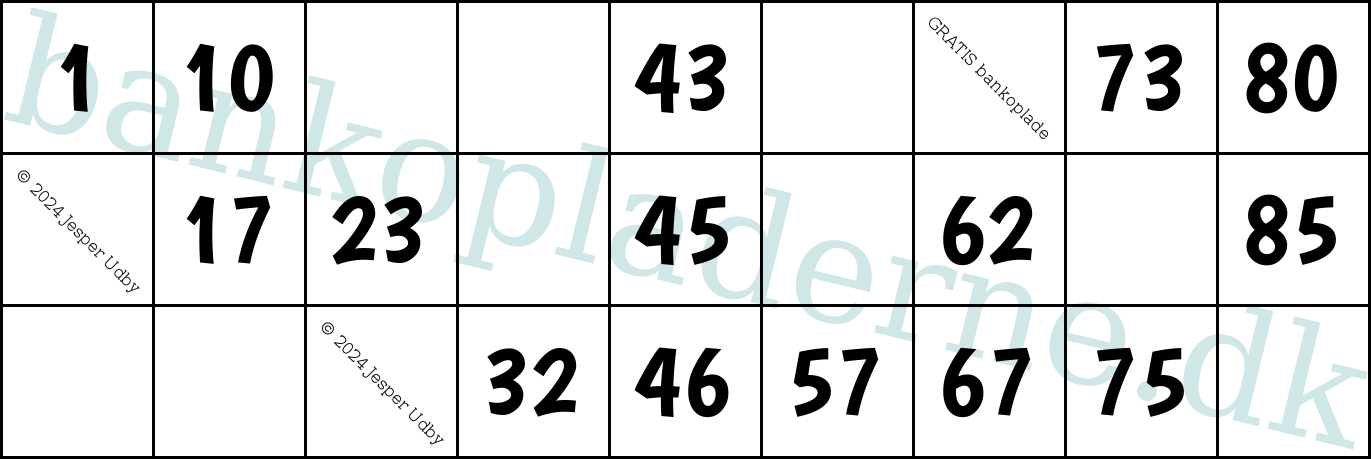

By the way, a “bankoplade” is a “board” for playing the English version of Bingo: A 9×3 matrix where each column contains 1..3 numbers (increasing downwards) and each row contains exactly 5 numbers, numbers are in the range 1..90 where 1..9 is in the first column, 10..19 in the second … and 80..90 in the last column… Like:

The architecture is quite simple: The static parts run on a custom made AWS EC2 instance primarily with nginx and doing the front-end SSL certificate handling.

And the “backend” (which this blog entry is about) is a spring-boot application serving all the non-static parts.

The spring-boot application has 3 different levels of security:

- None for the free parts

- Expiring API keys for paid services

- Simple OAuth2 JWT for back-office UI

The backend runs in an AWS Elastic Beanstalk container.

Why?!

- Spring-boot was chosen as a simple integration framework when I created the setup in 2016

- AWS Elastic Beanstalk was chosen in 2016 because it was an almost one-click to get your java application running in the cloud

Log4Shell

- Was it hit by the log4shell feature/defect/attack? yeah, well, kind-of.

- Was it damaging? No, there is no LDAP or other relevant lookups in the infrastructure.

- Did someone try to break in? According to the logs, yes, no success.

- Was it fixed? Yes, as soon as possible…

- Was Log4j to blame? In my opinion: YES. A logging framework should be focused on logging, only doing very simple interpolations.

April 2024

So the frontend (bankopladerne.dk) and the backend (the aforementioned AWS Elastic Beanstalk deployment) had not been touched for real since 2022. Only pricing and some other minor text-only changes had been made.

The backend was running JDK11 and spring-boot 2.7.6…

In November 2023 I had attempted an upgrade from spring-boot 2.7.x to 3.0.x with little success.

I used a few guides from the internet only to realize that my security setup was non-standard in ways that made upgrade guides almost useless and just plain gave up. There were no immediate issues in the ancient setup, no-one seemed to have been able to breach it and make a havoc… And I was too busy in my daytime job to be able to find the time to dig into the issues.

So, nothing here to see, move on 🙂

Testing…

Test coverage of the implementation in general leaved a lot to be desired…

As a fact, upgrading an application with good test coverage and you only have to focus on the areas failing.

Upgrading an application with poor coverage and you might get issues here and there but you cannot trust an “all green lights” since areas not covered will most definitely blow up when hit by reality.

The plan…

So I needed a plan:

- Upgrade JDK to latest covered by the infrastructure (that is from JAVA 11 to 21)

- Introduce testing (unit- and integration- tests) to cover at least the most important components and flows

- Upgrade spring-boot to latest (3.2.4) and all other related dependencies to latest or whatever is recommended by spring-boot (spring-boot-dependencies bom)

- Fix compile errors

- Improve code in general, going from JAVA 11 to 21

Step 1 was easy, and you might ask, why not go straight to JAVA 22. But at the moment AWS Elastic Beanstalk only supports JAVA 21 and I am quite content with that.

Step 2 actually took quite at long time… “The cobbler’s children have the worst shoes” and my setup had almost no test coverage, and particularly the security related stuff was untested.

So upgrading and fixing compile errors might resolve in a solution with everything secured with no-one (inaccessible) or left without security at all, none of which are really acceptable.

Also there are a few flows where different security infrastructure components needs to work together in order to make ends meet. These flows needs testing as well…

It took me like almost 2 working days of time to complete testing in these areas, most massaging endpoints with relevant success- and failure- scenarios (using @MockMvc and setting up @WebMvcTest tests) like below:

@Test

void consentCheck_noCookie_returnsFalse() throws Exception {

mockMvc.perform(get(CookieConsentCheckController.BASE_PATH))

.andExpect(status().isOk())

.andExpect(content().string(containsString("false")));

}And while implementing unit- and integration- testing one always finds minor defects in the weirdest places, often in situations where the solution is so obvious that you do not need testing. The complex areas are always in better shape since the developer is really paying attention.

Step 3..4 was actually just ignore the migrations guides, go a step back, read up on your homework and implement accordingly.

Having good test-coverage in all relevant security scenarios (including the negative tests) helped ensuring nothing important was broken (Eg allowing everybody into secured areas or disallowing anonymous from shared areas etc).

Step 5:

Every line of code was considered to be upgraded:

- Is it actually used (if not, delete it)

- If it is not tested, either add test or remove it…

- Some for-loops was converted to streams

- Introduce records where appropriate

- Do any possible validation in the compact constructor

- Prefer

"stuff with interpolations".formatted(parameters)over String.format("stuff with interpolations", parameters)

Most important was the transition to use java records where possible and implementing a Data Oriented Programming approach where a record of data is immutable and always valid (In a few places the entire state is not known immediately and so eg a MutableInt was introduced to hold the state of an integer which can only be set once, inspired by the Mutable discussion).

While doing this, every validation needs testing which actually revealed a few defects of the “off by one” type.

In general the observation was that the simpler the code, the bigger risk of it having defects. It is obvious that the developer takes extreme care when stuff is slightly complicated, but really does not care when implementations are simple.

Eg, what is the problem with this implementation (creating an array of integers given an array of (positive) byte values)?:

public static int[] asIntArray(byte[] numbers) {

return IntStream.range(0, numbers.length)

.map(i -> numbers[i])

.toArray();

}Done for now…

So the software has been upgraded to use latest JAVA features (with a few exceptions) and latest dependencies.

There are no visible changes to the users.

But the code base is in a much better shape primarily because of test-coverage. Next upgrade from spring-boot 3.2.x to 4.something is going to be so much simpler 😀

No, probably not. A final word to anyone producing or sponsoring software-based solutions: One might think that when it is delivered (and hopefully accepted, FAT) it is done! Well not done, but when all defects are fixed then it is done! BUT NO…

Software is not different from any other product. You invest in it being created. You invest in its maintenance. And you plan for (and invest in) its retirement.